Is cortical connectivity optimized for storing information?

Is the brain optimal for information storage?

- Topic of interest

- Two hypotheses of information storage in the brain

- Motivation from models to connection style

- What are the network connections when the number of learned patterns reaches maximum?

- Take-home-message and discussion

- References

Here are the original and updated notes.

Topic of interest

Intuition: the brain should be fully connected, given its high neuron density and the interactions between axon collaterals.

Empirical evidence: the brain is sparsely connected, with a connection probability of about $10\%$ in the neocortex.

Question: is sparse connectivity an optimal setting for information storage?

Two hypotheses of information storage in the brain

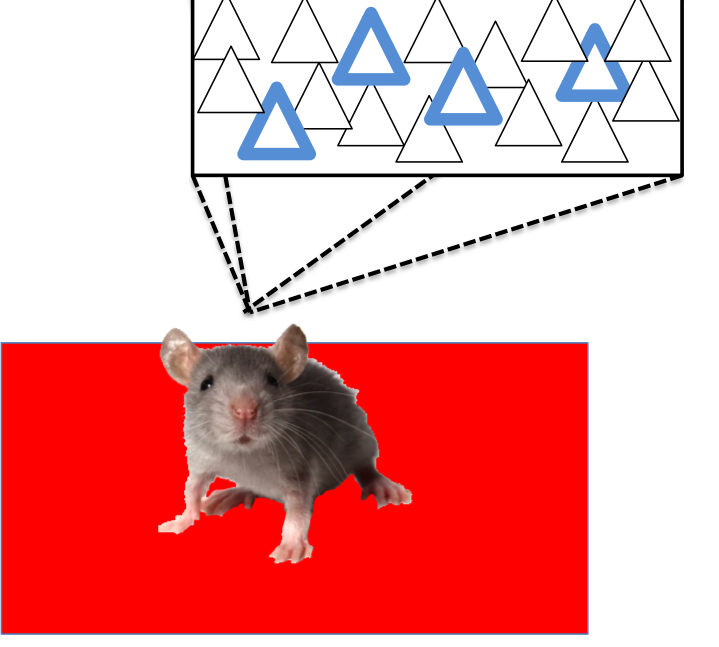

attractor state in a recurrent network

A small group of neurons fires at higher rates than the background activity, and this elevated activity is sustained by recurrent connections.

This is a widely accepted mechanism of information storage in feedback (recurrent) networks.
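A minimal sketch of what an attractor state means for binary neurons, assuming synchronous updates, a fixed threshold `T`, and a hand-picked toy weight matrix (all illustrative, not the study's set-up). A pattern is stored if the network, started in that pattern, stays in it; this checks the fixed-point condition only, whereas a true attractor must also pull nearby states toward it. The function names are hypothetical.

```python
def update(weights, state, T):
    """One synchronous update of all binary neurons.

    Neuron i becomes active (1) if its summed input sum_j w_ij * S_j exceeds T.
    """
    return [1 if sum(w * s for w, s in zip(row, state)) > T else 0
            for row in weights]


def is_fixed_point(weights, pattern, T):
    """A stored attractor state must reproduce itself under the dynamics."""
    return update(weights, pattern, T) == pattern


# Toy network: neurons 0 and 1 excite each other; neurons 2 and 3 are unconnected.
W = [
    [0.0, 1.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
print(is_fixed_point(W, [1, 1, 0, 0], T=0.5))  # True: the pair sustains its own activity
print(is_fixed_point(W, [1, 0, 0, 0], T=0.5))  # False: activity jumps to neuron 1
```

The elevated activity of the small group survives only because the two neurons feed each other, which is the role recurrence plays in this hypothesis.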

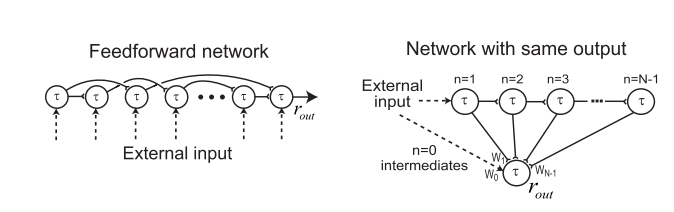

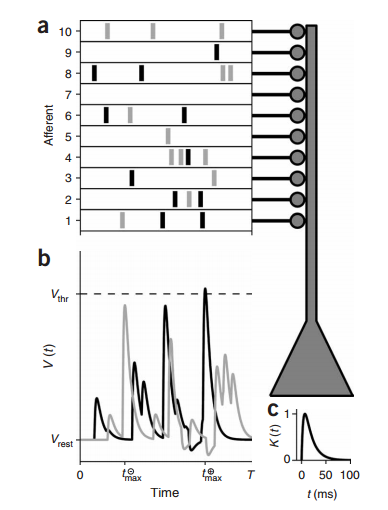

firing sequences in a feedforward network

It has also been shown that a signal can be stored in a feedforward network in the form of firing sequences of a few neurons.

A feedforward network can represent (or store) information because the neurons (or subnetworks) at different stages have different response time constants.
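A minimal sketch of storage through a firing sequence, assuming discrete time steps and that each stage simply relays its activity to the next one. This is a deliberate simplification that ignores the stage-specific time constants; the names `run_chain` and `read_out` are hypothetical. The input pattern survives without any recurrent loop, and the index of the active stage encodes how long ago it arrived.

```python
def run_chain(n_stages, input_pattern, n_steps):
    """Propagate a binary pattern through a purely feedforward chain of stages.

    Stage 0 receives the input at t = 0; at every later step each stage takes
    the activity of the stage before it (no recurrent connections).
    Returns the list of network states, one per time step.
    """
    silent = [0] * len(input_pattern)
    state = [input_pattern] + [silent for _ in range(n_stages - 1)]
    history = [state]
    for _ in range(n_steps):
        # Shift activity one stage downstream; stage 0 gets no new input.
        state = [silent] + state[:-1]
        history.append(state)
    return history


def read_out(state):
    """Return (stage index, pattern) of the first active stage, or None if all are silent."""
    for k, stage in enumerate(state):
        if any(stage):
            return k, stage
    return None


history = run_chain(3, [1, 0, 1], n_steps=4)
print(read_out(history[2]))  # (2, [1, 0, 1]): pattern preserved, now at stage 2
print(read_out(history[3]))  # None: the sequence has left the 3-stage chain
```

In this toy version the memory lasts only as many steps as there are stages, so chain length sets the storage duration.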

Motivation from models to connection style

Current numerical studies usually start from a specific, assumed connection pattern, so it is unclear whether cortical connectivity obeys the rules postulated by these models.

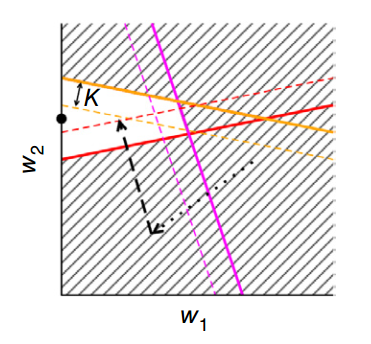

learning algorithm

The present study therefore starts from a potentially fully connected network and uses the perceptron learning algorithm to classify each neuron's total input, i.e. to determine its output. The patterns to be learned determine the space of allowed synaptic weights.
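A minimal sketch of the classic perceptron rule for a single binary output neuron, assuming a fixed threshold, zero initial weights and a learning rate `lr` (names chosen here for illustration). It omits any margin or sign constraint on the weights, so it is a baseline version of the algorithm, not the study's exact procedure.

```python
def train_perceptron(patterns, targets, threshold, lr=1.0, max_epochs=100):
    """Classic perceptron rule for one binary output neuron.

    patterns: list of binary input vectors S (0/1 entries)
    targets:  desired output (0 or 1) for each pattern
    Returns (weights, converged).
    """
    weights = [0.0] * len(patterns[0])
    for _ in range(max_epochs):
        errors = 0
        for S, target in zip(patterns, targets):
            h = sum(w * s for w, s in zip(weights, S))  # total synaptic input
            output = 1 if h > threshold else 0          # fire if input exceeds threshold
            if output != target:
                errors += 1
                # Change only the weights of active inputs, toward the desired output.
                weights = [w + lr * (target - output) * s for w, s in zip(weights, S)]
        if errors == 0:
            return weights, True
    return weights, False


weights, ok = train_perceptron([[1, 1], [1, 0], [0, 1], [0, 0]], [1, 0, 0, 0], threshold=1.5)
print(weights, ok)  # [1.0, 1.0] True
```

Each learned pattern carves away part of the weight space; the weights that remain are those compatible with every pattern.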

network setting

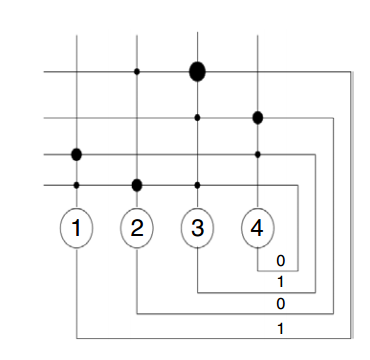

simplified neuron model: binary ($S_j = 1$ is active, $S_j = 0$ is inactive)

neuron types: excitatory and inhibitory.

connections: inhibitory neurons are fully connected, and these connections are not modified during learning. Excitatory neurons are potentially fully connected: any excitatory pair may be connected, and learning sets the weights.

learned patterns and synaptic weights

In the current study, random firing patterns are generated and presented to the network, which has to learn (store) them by finding a suitable set of synaptic weights.
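Under the attractor hypothesis, storing a pattern means making it a fixed point, so the network-level task splits into one perceptron problem per neuron: neuron $i$ must map the other neurons' activities in pattern $\mu$ to its own state $S_i^\mu$. The sketch below assumes independent random binary patterns with a hypothetical coding level `f` (probability that a neuron is active) and excludes self-connections. The `sequence=True` option, also an assumption about the set-up, instead asks each pattern to evoke the next one. All function names are illustrative.

```python
import random


def random_patterns(n_neurons, n_patterns, f, seed=0):
    """Generate random binary patterns; each neuron is active with probability f."""
    rng = random.Random(seed)
    return [[1 if rng.random() < f else 0 for _ in range(n_neurons)]
            for _ in range(n_patterns)]


def perceptron_problem(patterns, i, sequence=False):
    """Turn network storage into a classification task for neuron i.

    Each item is (inputs from all other neurons, desired state of neuron i).
    Attractor storage: neuron i must reproduce its own state in the same pattern.
    Sequence storage: neuron i must produce its state in the next pattern.
    Self-connections are excluded in both cases.
    """
    problems = []
    for mu, pattern in enumerate(patterns):
        inputs = [s for j, s in enumerate(pattern) if j != i]
        if sequence:
            if mu + 1 == len(patterns):
                break  # the last pattern has no successor
            target = patterns[mu + 1][i]
        else:
            target = pattern[i]
        problems.append((inputs, target))
    return problems


patterns = [[1, 0, 1], [0, 1, 1]]
print(perceptron_problem(patterns, 0))                 # [([0, 1], 1), ([1, 1], 0)]
print(perceptron_problem(patterns, 0, sequence=True))  # [([0, 1], 0)]
```

Because each neuron's problem involves only its own incoming weights, the neurons can be trained independently.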

synaptic integration and perceptron classification

Neurons are connected with different strengths: $w_{ij}$ is the synaptic weight from neuron $j$ to neuron $i$.

The synaptic inputs to each neuron are summed into a total input

$$h_i(t) = \sum_{j=1}^{N} w_{ij} S_j(t),$$

where $S_j$ is the activity of input neuron $j$ and $w_{ij}$ is the corresponding weight. This total input is compared to a threshold $T$, with a margin $K \ge 0$:

If $h_i(t) > T + K$, neuron $i$ fires;

if $h_i(t) < T - K$, neuron $i$ stays silent.
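A minimal sketch of the fire/silence rule with threshold $T$ and margin $K$, assuming $K \ge 0$ and treating a summed input that lands inside the band $[T-K, T+K]$ as a response that is not robustly stored. The names `respond` and `stored_robustly` are hypothetical.

```python
def respond(weights_row, state, T, K):
    """Fire/silence rule with margin K for one neuron.

    Returns 1 if the summed input exceeds T + K, 0 if it is below T - K,
    and None if it falls inside the band [T - K, T + K], where the
    response is not considered robust.
    """
    h = sum(w * s for w, s in zip(weights_row, state))  # total synaptic input
    if h > T + K:
        return 1
    if h < T - K:
        return 0
    return None


def stored_robustly(weights_row, problems, T, K):
    """True if every (inputs, target) pair is answered correctly, outside the margin band."""
    return all(respond(weights_row, inputs, T, K) == target for inputs, target in problems)


row = [0.5, 0.5, 0.0]
print(respond(row, [1, 1, 0], T=0.5, K=0.2))  # 1
print(respond(row, [1, 0, 0], T=0.5, K=0.2))  # None: 0.5 lies inside [0.3, 0.7]
print(respond(row, [0, 0, 1], T=0.5, K=0.2))  # 0
```

A larger $K$ demands that each input clear the threshold by more, which makes stored patterns tolerant to noise but reduces how many can be learned.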

What are the network connections when the number of learned patterns reaches maximum?

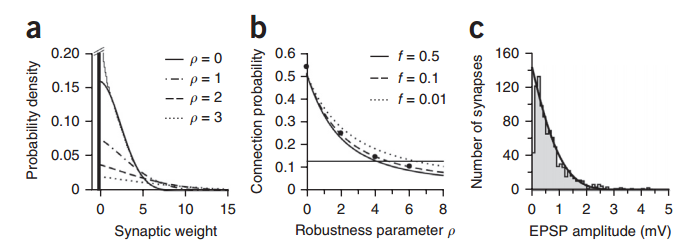

dominant weak synapses

The synaptic weight distribution contains many weak synapses and few strong ones, which agrees with experimental evidence. (Simulation results based on the attractor state hypothesis.)
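One way to quantify these statements from a learned weight matrix is sketched below, assuming non-negative excitatory weights, treating a zero weight as an absent ("potential") synapse, and using an arbitrary `weak_cutoff` to separate weak from strong synapses. The function name and the cutoff are illustrative.

```python
def weight_stats(weights, weak_cutoff):
    """Summarize an excitatory weight matrix (weights[i][j]: from neuron j to neuron i).

    Returns the connection probability (fraction of off-diagonal weights that
    are nonzero) and, among nonzero weights, the fraction below weak_cutoff.
    """
    n = len(weights)
    off_diag = [weights[i][j] for i in range(n) for j in range(n) if i != j]
    nonzero = [w for w in off_diag if w > 0]
    p = len(nonzero) / len(off_diag)
    weak_fraction = sum(w < weak_cutoff for w in nonzero) / len(nonzero) if nonzero else 0.0
    return p, weak_fraction


W = [
    [0.0, 0.1, 0.0],
    [0.2, 0.0, 0.0],
    [0.0, 0.9, 0.0],
]
p, weak = weight_stats(W, weak_cutoff=0.5)
print(p, round(weak, 3))  # 0.5 0.667
```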

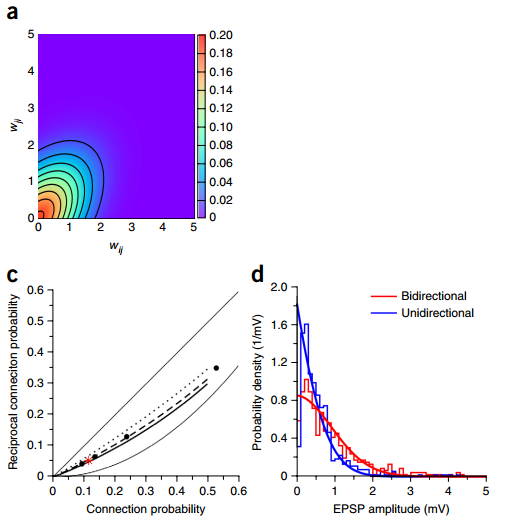

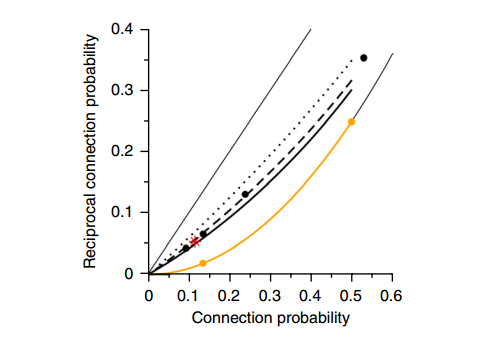

reciprocal connections are stronger

Reciprocal connections are the stronger ones. The probability of a reciprocal connection is above the square of the connection probability, $p^2$, which is the value expected if connections were formed independently. (Simulation results based on the attractor state hypothesis.)
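If each connection existed independently with connection probability $p$, a given pair would be connected in both directions with probability $p^2$. The sketch below, assuming non-negative weights where zero means no connection, computes the observed reciprocal probability relative to $p^2$ and compares the mean weight of synapses in reciprocal versus unidirectional pairs. The function name is hypothetical, and the toy matrix is chosen by hand.

```python
def reciprocity(weights):
    """Compare reciprocal connections with the independent-connection expectation.

    weights[i][j] is the (non-negative) weight from neuron j to neuron i;
    zero means no connection. Returns the connection probability p, the
    fraction of pairs connected in both directions, its ratio to p**2, and
    the mean synaptic weight in reciprocal vs unidirectional pairs.
    """
    n = len(weights)
    n_pairs = n * (n - 1) // 2
    n_conn = n_bidir = 0
    bidir_w, unidir_w = [], []
    for i in range(n):
        for j in range(i + 1, n):
            a, b = weights[i][j], weights[j][i]
            n_conn += (a > 0) + (b > 0)
            if a > 0 and b > 0:
                n_bidir += 1
                bidir_w += [a, b]
            elif a > 0 or b > 0:
                unidir_w.append(max(a, b))  # the other direction is zero
    p = n_conn / (n * (n - 1))
    p_bidir = n_bidir / n_pairs

    def mean(xs):
        return sum(xs) / len(xs) if xs else float("nan")

    return {
        "p": p,
        "p_bidir": p_bidir,
        "ratio_to_p_squared": p_bidir / p**2 if p > 0 else float("nan"),
        "mean_w_bidir": mean(bidir_w),
        "mean_w_unidir": mean(unidir_w),
    }


stats = reciprocity([[0.0, 0.8, 0.0], [0.6, 0.0, 0.0], [0.0, 0.2, 0.0]])
print(round(stats["ratio_to_p_squared"], 2))  # 1.33
print(round(stats["mean_w_bidir"], 2), stats["mean_w_unidir"])  # 0.7 0.2
```

A ratio above 1 means reciprocal pairs are over-represented relative to a network with independent connections; a ratio of 1 corresponds to chance level.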

reciprocal connections in sequence hypothesis

Reciprocal connections were also analyzed when simulating the sequence hypothesis of information storage. In that case, however, the probability of reciprocal connections equals the square of the connection probability, i.e. the level expected by chance.

Take-home-message and discussion

Conclusion: a sparsely connected network with stronger reciprocal connections makes the brain efficient at storing information.

Discussion

How can memory be implemented in artificial neural networks (ANNs)?

Could the tempotron learning algorithm be used?

What is the function of “potential” (zero-weight) synapses?

References

picture of memory engram is modified from this one

Goldman MS. Memory without feedback in a neural network. Neuron. 2009 Feb 26;61(4):621-34.